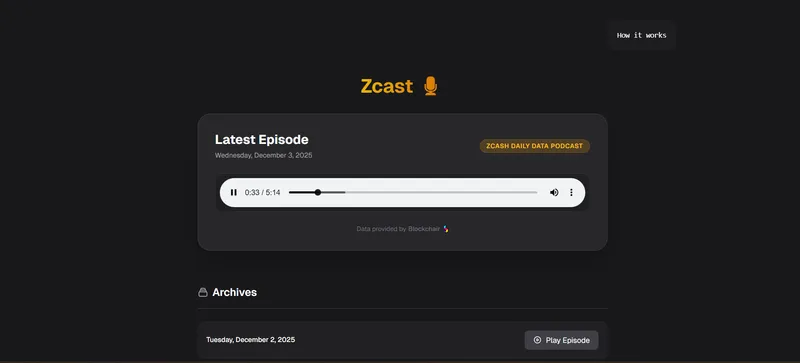

Zcast

Privacy data has a voice.

The problem it solves

On-chain data is powerful, but it’s also overwhelming. Understanding the real state of a blockchain like Zcash requires pulling daily data dumps, computing multiple metrics, tracking shielded vs. transparent flows, interpreting anomalies, and then translating all of that into meaningful insight. For most people, this is too technical, too time-consuming, or both.

Zcast eliminates this entire workflow by turning raw Zcash blockchain data into a daily AI-generated podcast.

Instead of manually checking explorers, dashboards, or spreadsheets, users get a 5-to-7-minute, two-host conversational breakdown of the previous day's most important network metrics, automatically generated from real Zcash data dumps. It transforms complex on-chain analysis into something you can simply listen to.

What People Can Use It For

- Daily On-Chain Intelligence, Without the Dashboard: Traders, investors, and researchers can stay informed on network activity, transaction behavior, fee trends, and large movements without opening a single chart or explorer.
- Privacy & Shielded Pool Monitoring: Privacy advocates and analysts can track how value moves between transparent and shielded pools through clear metrics like net shielded value flow, explained in plain language.
- Automated Anomaly Awareness: The podcast highlights unusual events such as fee spikes, transaction surges, hash rate shifts, or abnormal shielded pool movements that may signal upgrades, market reactions, or network stress.
- Accessible Blockchain Education: By using a natural, two-host discussion format, Zcast makes advanced on-chain metrics understandable to non-technical users who want to follow Zcash without learning how to parse raw blockchain data.
- Passive Information Consumption: Users can stay informed while commuting, working out, or multitasking, turning blockchain analytics into an effortless daily habit rather than an active research task.

Challenges we ran into

1. Raw PCM Audio Processing

One specific hurdle was working with the Gemini 2.5 Flash TTS API. The model returns raw PCM (Pulse Code Modulation) audio data rather than a pre-formatted MP3 or WAV file. Initially, the generated audio files were unplayable in standard players because they lacked the necessary container headers.

How I got over it: I implemented a custom createWavHeader function in TypeScript. This function manually constructs the 44-byte RIFF WAVE header, calculating the block align, byte rate, and data sizes from the 24 kHz sample rate, and prepends it to the raw PCM buffer before saving. The output is then a standard, playable WAV file.
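As an illustration of the header layout described above, here is a minimal sketch of what such a function could look like. It is not the project's actual code: the 24 kHz sample rate comes from the write-up, while the mono channel count, the 16-bit sample width, and the pcmToWav helper are assumptions made for the example.

```typescript
import { Buffer } from "node:buffer";

// Build a canonical 44-byte RIFF/WAVE header for uncompressed PCM audio.
// Assumed defaults (not confirmed by the source): mono, 16-bit samples.
function createWavHeader(
  dataSize: number,
  sampleRate = 24000,
  numChannels = 1,
  bitsPerSample = 16,
): Buffer {
  const blockAlign = (numChannels * bitsPerSample) / 8; // bytes per sample frame
  const byteRate = sampleRate * blockAlign; // bytes of audio per second

  const header = Buffer.alloc(44);
  header.write("RIFF", 0, "ascii");
  header.writeUInt32LE(36 + dataSize, 4); // file size minus the first 8 bytes
  header.write("WAVE", 8, "ascii");
  header.write("fmt ", 12, "ascii");
  header.writeUInt32LE(16, 16); // size of the fmt chunk for PCM
  header.writeUInt16LE(1, 20); // audio format 1 = linear PCM
  header.writeUInt16LE(numChannels, 22);
  header.writeUInt32LE(sampleRate, 24);
  header.writeUInt32LE(byteRate, 28);
  header.writeUInt16LE(blockAlign, 32);
  header.writeUInt16LE(bitsPerSample, 34);
  header.write("data", 36, "ascii");
  header.writeUInt32LE(dataSize, 40); // number of PCM bytes that follow
  return header;
}

// Hypothetical helper: prepend the header to a raw PCM buffer.
function pcmToWav(pcm: Buffer): Buffer {
  return Buffer.concat([createWavHeader(pcm.length), pcm]);
}
```

With these assumed defaults, one second of audio is 48,000 bytes of PCM, so the block align is 2 and the byte rate is 48,000. The resulting buffer can be written to disk with fs.writeFileSync and opened in any standard player.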

2. Cross-Language Pipeline Orchestration

Coordinating the Node.js application (Next.js) with the Python data science stack (Pandas/NumPy) was difficult, especially keeping execution consistent across deployment environments (local vs. Replit).

How I got over it: I built a pipeline script, daily-pipeline.ts, that acts as the conductor. It handles data fetching and file management in Node, spawns the Python process solely for the heavy numerical analysis, and communicates with it via a shared JSON interface. This let me use Python’s data libraries while keeping the web infrastructure in TypeScript.
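The conductor pattern described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the write-up does not say how the JSON is exchanged, so the sketch assumes the child process reads JSON on stdin and prints JSON on stdout, and the names runProcess and runAnalysis, as well as the script name in the usage note, are hypothetical.

```typescript
import { spawn } from "node:child_process";

interface ProcessResult {
  code: number | null;
  stdout: string;
  stderr: string;
}

// Run a command, optionally feeding it stdin, and collect its output.
function runProcess(command: string, args: string[], stdin = ""): Promise<ProcessResult> {
  return new Promise((resolve, reject) => {
    const child = spawn(command, args);
    let stdout = "";
    let stderr = "";
    child.stdout.setEncoding("utf8");
    child.stderr.setEncoding("utf8");
    child.stdout.on("data", (chunk: string) => { stdout += chunk; });
    child.stderr.on("data", (chunk: string) => { stderr += chunk; });
    child.on("error", reject); // e.g. the interpreter is not installed
    child.on("close", (code) => resolve({ code, stdout, stderr }));
    // The child may exit before reading its input; ignore that write error.
    child.stdin.on("error", () => {});
    child.stdin.end(stdin);
  });
}

// Hypothetical wrapper: send JSON in, parse JSON out (assumed interface).
async function runAnalysis<T>(command: string, args: string[], input: unknown): Promise<T> {
  const result = await runProcess(command, args, JSON.stringify(input));
  if (result.code !== 0) {
    throw new Error(`analysis exited with code ${result.code}: ${result.stderr}`);
  }
  return JSON.parse(result.stdout) as T;
}
```

A call might look like runAnalysis("python3", ["analyze.py"], { date: "2025-01-01" }), where analyze.py is a placeholder name. Passing the interpreter as a parameter rather than hard-coding it is one way to absorb differences between a local machine and a hosted environment.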